All nodes: bind hosts

[root@Perng-Node2 ~]

127.0.0.1 localhost localhost.localdomain localhost4 localhost4.localdomain4

::1 localhost localhost.localdomain localhost6 localhost6.localdomain6

192.168.159.100 Perng-Master

192.168.159.110 Perng-Node1

192.168.159.120 Perng-Node2
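The same three cluster entries need to be present on every node. As a minimal sketch, they can be appended idempotently so that rerunning the step does not duplicate lines. The `add_host_entry` helper and the scratch file are illustrative assumptions, not part of the original steps; on a real node the target would be `/etc/hosts`, edited as root.

```shell
#!/bin/sh
# add_host_entry FILE IP NAME
# Append "IP NAME" to FILE unless NAME already appears as a host name in it.
add_host_entry() {
    if grep -qE "[[:space:]]$3([[:space:]]|\$)" "$1" 2>/dev/null; then
        return 0
    fi
    printf '%s %s\n' "$2" "$3" >> "$1"
}

# Assumption: work on a scratch file first; use /etc/hosts (as root) on a real node.
HOSTS_FILE=$(mktemp)
printf '127.0.0.1 localhost\n' > "$HOSTS_FILE"

for pass in 1 2; do   # the second pass adds nothing
    add_host_entry "$HOSTS_FILE" 192.168.159.100 Perng-Master
    add_host_entry "$HOSTS_FILE" 192.168.159.110 Perng-Node1
    add_host_entry "$HOSTS_FILE" 192.168.159.120 Perng-Node2
done

cat "$HOSTS_FILE"
```

Running the loop twice is only there to show that existing names are skipped.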

All nodes: install Docker

[root@Perng-Master ~]

[root@Perng-Master ~]

[root@Perng-Master ~]

[root@Perng-Master ~]

[root@Perng-Master ~]

[root@Perng-Master ~]

[root@Perng-Master ~]

All nodes: configure the K8s environment

[root@Perng-Master ~]

[root@Perng-Master ~]

[root@Perng-Master ~]

[root@Perng-Master ~]

[root@Perng-Master ~]

[root@Perng-Master ~]

> net.bridge.bridge-nf-call-ip6tables = 1

> net.bridge.bridge-nf-call-iptables = 1

> EOF

[root@Perng-Master ~]
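The two `net.bridge.*` settings in the listing make bridged pod traffic visible to iptables. A minimal sketch of writing and checking them follows. The file name `k8s.conf`, the scratch directory, and the `sysctl_value` helper are assumptions for illustration; on a node the file would normally live under `/etc/sysctl.d/`.

```shell
#!/bin/sh
# Assumption: CONF_DIR is a scratch directory; on a node it would be /etc/sysctl.d.
CONF_DIR=$(mktemp -d)
CONF_FILE="$CONF_DIR/k8s.conf"

cat > "$CONF_FILE" <<EOF
net.bridge.bridge-nf-call-ip6tables = 1
net.bridge.bridge-nf-call-iptables = 1
EOF

# sysctl_value FILE KEY: print the value configured for KEY in FILE.
sysctl_value() {
    sed -n "s/^$2[[:space:]]*=[[:space:]]*//p" "$1"
}

sysctl_value "$CONF_FILE" net.bridge.bridge-nf-call-iptables

# On a real node (as root), assuming the br_netfilter module is available:
#   modprobe br_netfilter && sysctl --system
```

The `net.bridge.*` keys only exist once `br_netfilter` is loaded, which is why the commented commands load the module before applying the settings.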

Master node: install the K8s components

[root@Perng-Master ~]

[root@Perng-Master ~]

[root@Perng-Master ~]

> [kubernetes]

> name=Kubernetes

> baseurl=https://mirrors.aliyun.com/kubernetes/yum/repos/kubernetes-el7-x86_64

> enabled=1

> gpgcheck=0

> repo_gpgcheck=0

> gpgkey=https://mirrors.aliyun.com/kubernetes/yum/doc/yum-key.gpg https://mirrors.aliyun.com/kubernetes/yum/doc/rpm-package-key.gpg

> EOF

[root@Perng-Master ~]

[root@Perng-Master ~]

[root@Perng-Master ~]

[root@Perng-Master ~]
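The later steps pin the control plane to v1.22.3, so the packages installed from this repo should probably be the same version. The install commands themselves are not visible in the listing, so the following is only a sketch under that assumption; `pinned_packages` is a hypothetical helper, and the `yum` command is printed rather than executed.

```shell
#!/bin/sh
# pinned_packages VERSION: print kubelet/kubeadm/kubectl package specs for yum,
# with any leading "v" stripped (v1.22.3 -> kubelet-1.22.3 ...).
pinned_packages() {
    pp_ver=${1#v}
    printf 'kubelet-%s kubeadm-%s kubectl-%s\n' "$pp_ver" "$pp_ver" "$pp_ver"
}

KUBE_VERSION=v1.22.3

# Dry run: print the command instead of executing it.
echo "yum install -y $(pinned_packages "$KUBE_VERSION")"
```

Pinning avoids pulling a newer kubeadm than the images prepared in the following sections.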

Worker nodes: install the K8s components

[root@Perng-Node1 ~]

> [kubernetes]

> name=Kubernetes

> baseurl=https://mirrors.aliyun.com/kubernetes/yum/repos/kubernetes-el7-x86_64

> enabled=1

> gpgcheck=0

> repo_gpgcheck=0

> gpgkey=https://mirrors.aliyun.com/kubernetes/yum/doc/yum-key.gpg https://mirrors.aliyun.com/kubernetes/yum/doc/rpm-package-key.gpg

> EOF

[root@Perng-Node1 ~]

[root@Perng-Node1 ~]

[root@Perng-Node1 ~]

[root@Perng-Node1 ~]

Master node: pull the K8s images

[root@Perng-Master ~]

k8s.gcr.io/kube-apiserver:v1.22.3

k8s.gcr.io/kube-controller-manager:v1.22.3

k8s.gcr.io/kube-scheduler:v1.22.3

k8s.gcr.io/kube-proxy:v1.22.3

k8s.gcr.io/pause:3.5

k8s.gcr.io/etcd:3.5.0-0

k8s.gcr.io/coredns/coredns:v1.8.4

[root@Perng-Master ~]

kubeadm config images list --kubernetes-version=v1.22.3

set -e

KUBE_VERSION=v1.22.3

KUBE_PAUSE_VERSION=3.5

ETCD_VERSION=3.5.0-0

CORE_DNS_VERSION=v1.8.4

GCR_URL=k8s.gcr.io

ALIYUN_URL=registry.cn-hangzhou.aliyuncs.com/google_containers

images=(kube-proxy:${KUBE_VERSION}

kube-scheduler:${KUBE_VERSION}

kube-controller-manager:${KUBE_VERSION}

kube-apiserver:${KUBE_VERSION}

pause:${KUBE_PAUSE_VERSION}

etcd:${ETCD_VERSION}

coredns:${CORE_DNS_VERSION})

for imageName in "${images[@]}"

do

docker pull "$ALIYUN_URL/$imageName" || docker pull coredns/coredns:1.8.4

if [ "$imageName" != "coredns:${CORE_DNS_VERSION}" ]

then

docker tag "$ALIYUN_URL/$imageName" "$GCR_URL/$imageName"

else

docker tag "$ALIYUN_URL/$imageName" "$GCR_URL/coredns/$imageName"

fi

docker rmi "$ALIYUN_URL/$imageName" || docker rmi "$imageName"

done

echo

echo "docker pull finished..."

:wq

[root@Perng-Master ~]

[root@Perng-Master ~]

[root@Perng-Master ~]

[root@Perng-Master ~]

[root@Perng-Master ~]

[root@Perng-Master ~]
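The one special case in the script is CoreDNS: in the image list above it sits under the nested path `k8s.gcr.io/coredns/coredns`, while every other image sits directly under `k8s.gcr.io`. A small sketch of that mapping, separated from the pull loop so it can be checked without Docker, might look like this. `target_ref` is a hypothetical helper name, and the loop only prints the `docker tag` commands.

```shell
#!/bin/sh
GCR_URL=k8s.gcr.io
ALIYUN_URL=registry.cn-hangzhou.aliyuncs.com/google_containers

# target_ref IMAGE: print the k8s.gcr.io reference that IMAGE should be tagged as.
target_ref() {
    case $1 in
        coredns:*) echo "$GCR_URL/coredns/$1" ;;
        *)         echo "$GCR_URL/$1" ;;
    esac
}

for image in kube-apiserver:v1.22.3 pause:3.5 coredns:v1.8.4; do
    # Dry run: show the tag command the loop would issue.
    echo "docker tag $ALIYUN_URL/$image $(target_ref "$image")"
done
```

Matching on the `coredns:` prefix rather than a fixed version string keeps the rule valid if `CORE_DNS_VERSION` changes.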

Each worker node: load the images

[root@Perng-Node1 ~]

[root@Perng-Node1 ~]

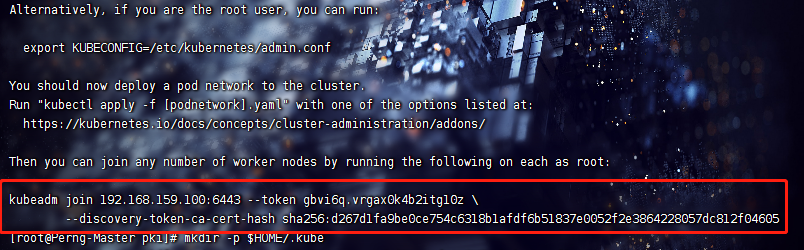

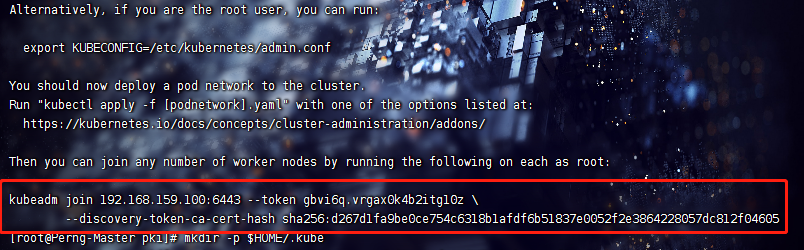

Master node: initialize the cluster

[root@Perng-Master ~]

> --image-repository registry.aliyuncs.com/google_containers \

> --kubernetes-version=v1.22.3 \

> --service-cidr=10.1.0.0/16 \

> --pod-network-cidr=10.244.0.0/16

[root@Perng-Master ~]

[root@Perng-Master ~]

[root@Perng-Master ~]
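The service range (`10.1.0.0/16`) and the pod range (`10.244.0.0/16`) passed to `kubeadm init` should not overlap each other or the network the hosts sit on. A rough pre-flight check can be sketched in plain shell arithmetic. The helper names are hypothetical, and the `/24` prefix for the host network is an assumption inferred from the `192.168.159.x` addresses, not something stated in the original.

```shell
#!/bin/sh
# ip_to_int A.B.C.D: print the address as a 32-bit integer.
ip_to_int() {
    old_ifs=$IFS
    IFS=.
    set -- $1          # split the dotted quad into $1..$4
    IFS=$old_ifs
    echo $(( ($1 << 24) | ($2 << 16) | ($3 << 8) | $4 ))
}

# cidrs_overlap CIDR1 CIDR2: succeed if the two ranges share any address.
# Two CIDR blocks overlap exactly when they agree on the shorter prefix.
cidrs_overlap() {
    co_len1=${1#*/}
    co_len2=${2#*/}
    co_len=$(( co_len1 < co_len2 ? co_len1 : co_len2 ))
    co_mask=$(( co_len == 0 ? 0 : (0xFFFFFFFF << (32 - co_len)) & 0xFFFFFFFF ))
    co_a=$(ip_to_int "${1%/*}")
    co_b=$(ip_to_int "${2%/*}")
    [ $(( co_a & co_mask )) -eq $(( co_b & co_mask )) ]
}

SERVICE_CIDR=10.1.0.0/16
POD_CIDR=10.244.0.0/16
NODE_NET=192.168.159.0/24   # assumption: prefix length inferred from the host IPs

for pair in "$SERVICE_CIDR $POD_CIDR" "$SERVICE_CIDR $NODE_NET" "$POD_CIDR $NODE_NET"; do
    set -- $pair
    if cidrs_overlap "$1" "$2"; then
        echo "overlap: $1 and $2"
    else
        echo "ok: $1 and $2"
    fi
done
```

With the values used here, all three pairs are reported as non-overlapping.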

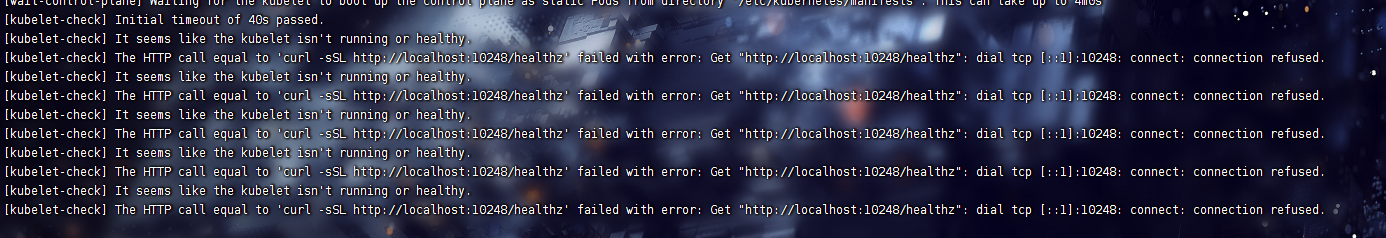

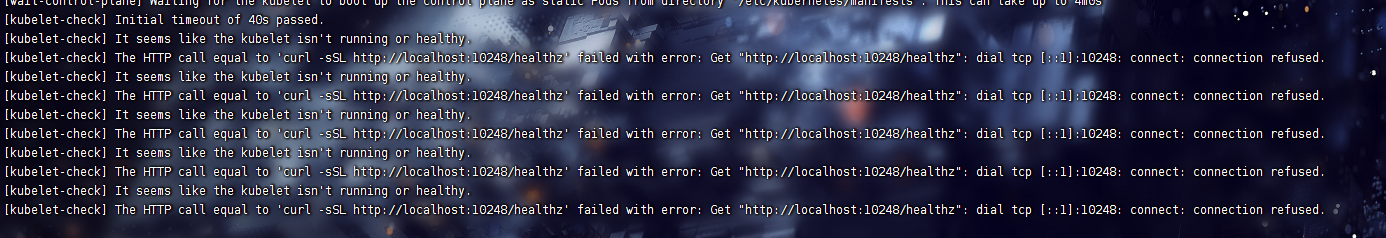

If access to localhost:10248 times out during initialization or while joining the cluster, fix it as follows

[root@Perng-Master pki]

{"exec-opts": ["native.cgroupdriver=systemd"] }

[root@Perng-Master pki]
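As background (not stated in the original): a common cause of the 10248 timeout is a cgroup driver mismatch, since kubeadm v1.22 configures the kubelet for `systemd` by default while Docker defaults to `cgroupfs`. The JSON line above switches Docker to `systemd`. A minimal sketch for checking that the setting is in place follows. `uses_systemd_cgroup` is a hypothetical helper, and a scratch file stands in for `/etc/docker/daemon.json`.

```shell
#!/bin/sh
# uses_systemd_cgroup FILE: succeed if FILE sets Docker's cgroup driver to systemd.
uses_systemd_cgroup() {
    grep -q 'native\.cgroupdriver=systemd' "$1" 2>/dev/null
}

# Assumption: a scratch file stands in for /etc/docker/daemon.json.
DAEMON_JSON=$(mktemp)
printf '%s\n' '{"exec-opts": ["native.cgroupdriver=systemd"] }' > "$DAEMON_JSON"

if uses_systemd_cgroup "$DAEMON_JSON"; then
    echo "cgroup driver: systemd"
else
    echo "cgroup driver: not systemd (kubelet health check on 10248 may fail)"
fi

# On a real node the change only takes effect after restarting Docker:
#   systemctl restart docker
```

On a running node, `docker info --format '{{.CgroupDriver}}'` reports the driver actually in use.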

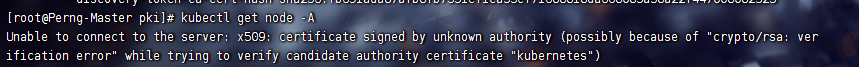

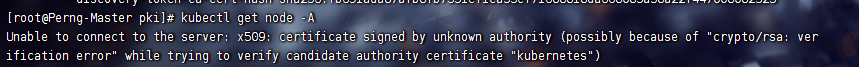

If a certificate error occurs, fix it as follows

[root@Perng-Master ~]

[root@Perng-Master ~]

[root@Perng-Master ~]

> --image-repository registry.aliyuncs.com/google_containers \

> --kubernetes-version=v1.22.3 \

> --service-cidr=10.1.0.0/16 \

> --pod-network-cidr=10.244.0.0/16

[root@Perng-Master ~]

[root@Perng-Master ~]

[root@Perng-Master ~]

Because the cluster was reset, every Node must rejoin the cluster

[root@Perng-Node1 ~]

[root@Perng-Node1 ~]

Master node: install the Flannel network plugin

[root@Perng-Master ~]

apiVersion: policy/v1beta1
kind: PodSecurityPolicy
metadata:
  name: psp.flannel.unprivileged
  annotations:
    seccomp.security.alpha.kubernetes.io/allowedProfileNames: docker/default
    seccomp.security.alpha.kubernetes.io/defaultProfileName: docker/default
    apparmor.security.beta.kubernetes.io/allowedProfileNames: runtime/default
    apparmor.security.beta.kubernetes.io/defaultProfileName: runtime/default
spec:
  privileged: false
  volumes:
  - configMap
  - secret
  - emptyDir
  - hostPath
  allowedHostPaths:
  - pathPrefix: "/etc/cni/net.d"
  - pathPrefix: "/etc/kube-flannel"
  - pathPrefix: "/run/flannel"
  readOnlyRootFilesystem: false
  runAsUser:
    rule: RunAsAny
  supplementalGroups:
    rule: RunAsAny
  fsGroup:
    rule: RunAsAny
  allowPrivilegeEscalation: false
  defaultAllowPrivilegeEscalation: false
  allowedCapabilities: ['NET_ADMIN', 'NET_RAW']
  defaultAddCapabilities: []
  requiredDropCapabilities: []
  hostPID: false
  hostIPC: false
  hostNetwork: true
  hostPorts:
  - min: 0
    max: 65535
  seLinux:
    rule: 'RunAsAny'
---
kind: ClusterRole
apiVersion: rbac.authorization.k8s.io/v1
metadata:
  name: flannel
rules:
- apiGroups: ['extensions']
  resources: ['podsecuritypolicies']
  verbs: ['use']
  resourceNames: ['psp.flannel.unprivileged']
- apiGroups:
  - ""
  resources:
  - pods
  verbs:
  - get
- apiGroups:
  - ""
  resources:
  - nodes
  verbs:
  - list
  - watch
- apiGroups:
  - ""
  resources:
  - nodes/status
  verbs:
  - patch
---
kind: ClusterRoleBinding
apiVersion: rbac.authorization.k8s.io/v1
metadata:
  name: flannel
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: flannel
subjects:
- kind: ServiceAccount
  name: flannel
  namespace: kube-system
---
apiVersion: v1
kind: ServiceAccount
metadata:
  name: flannel
  namespace: kube-system
---
kind: ConfigMap
apiVersion: v1
metadata:
  name: kube-flannel-cfg
  namespace: kube-system
  labels:
    tier: node
    app: flannel
data:
  cni-conf.json: |
    {
      "name": "cbr0",
      "cniVersion": "0.3.1",
      "plugins": [
        {
          "type": "flannel",
          "delegate": {
            "hairpinMode": true,
            "isDefaultGateway": true
          }
        },
        {
          "type": "portmap",
          "capabilities": {
            "portMappings": true
          }
        }
      ]
    }
  net-conf.json: |
    {
      "Network": "10.244.0.0/16",
      "Backend": {
        "Type": "vxlan"
      }
    }
---
apiVersion: apps/v1
kind: DaemonSet
metadata:
  name: kube-flannel-ds
  namespace: kube-system
  labels:
    tier: node
    app: flannel
spec:
  selector:
    matchLabels:
      app: flannel
  template:
    metadata:
      labels:
        tier: node
        app: flannel
    spec:
      affinity:
        nodeAffinity:
          requiredDuringSchedulingIgnoredDuringExecution:
            nodeSelectorTerms:
            - matchExpressions:
              - key: kubernetes.io/os
                operator: In
                values:
                - linux
      hostNetwork: true
      priorityClassName: system-node-critical
      tolerations:
      - operator: Exists
        effect: NoSchedule
      serviceAccountName: flannel
      initContainers:
      - name: install-cni-plugin
        image: rancher/mirrored-flannelcni-flannel-cni-plugin:v1.0.0
        command:
        - cp
        args:
        - -f
        - /flannel
        - /opt/cni/bin/flannel
        volumeMounts:
        - name: cni-plugin
          mountPath: /opt/cni/bin
      - name: install-cni
        image: quay.io/coreos/flannel:v0.16.0
        command:
        - cp
        args:
        - -f
        - /etc/kube-flannel/cni-conf.json
        - /etc/cni/net.d/10-flannel.conflist
        volumeMounts:
        - name: cni
          mountPath: /etc/cni/net.d
        - name: flannel-cfg
          mountPath: /etc/kube-flannel/
      containers:
      - name: kube-flannel
        image: quay.io/coreos/flannel:v0.16.0
        command:
        - /opt/bin/flanneld
        args:
        - --ip-masq
        - --kube-subnet-mgr
        resources:
          requests:
            cpu: "100m"
            memory: "50Mi"
          limits:
            cpu: "100m"
            memory: "50Mi"
        securityContext:
          privileged: false
          capabilities:
            add: ["NET_ADMIN", "NET_RAW"]
        env:
        - name: POD_NAME
          valueFrom:
            fieldRef:
              fieldPath: metadata.name
        - name: POD_NAMESPACE
          valueFrom:
            fieldRef:
              fieldPath: metadata.namespace
        volumeMounts:
        - name: run
          mountPath: /run/flannel
        - name: flannel-cfg
          mountPath: /etc/kube-flannel/
      volumes:
      - name: run
        hostPath:
          path: /run/flannel
      - name: cni-plugin
        hostPath:
          path: /opt/cni/bin
      - name: cni
        hostPath:
          path: /etc/cni/net.d
      - name: flannel-cfg
        configMap:
          name: kube-flannel-cfg

:wq

[root@Perng-Master ~]

[root@Perng-Master ~]
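The `Network` value in the manifest's `net-conf.json` (`10.244.0.0/16`) needs to match the `--pod-network-cidr` given to `kubeadm init`. A small sketch for checking this before applying the manifest follows. `flannel_network` is a hypothetical helper, and the scratch file holds only the `net-conf.json` fragment rather than the full manifest.

```shell
#!/bin/sh
# flannel_network FILE: print the "Network" value found in FILE
# (a manifest or a bare net-conf.json).
flannel_network() {
    sed -n 's/.*"Network"[[:space:]]*:[[:space:]]*"\([^"]*\)".*/\1/p' "$1"
}

# Assumption: a scratch file holding only the net-conf.json fragment.
NET_CONF=$(mktemp)
cat > "$NET_CONF" <<'EOF'
{
  "Network": "10.244.0.0/16",
  "Backend": {
    "Type": "vxlan"
  }
}
EOF

POD_CIDR=10.244.0.0/16   # value passed to --pod-network-cidr

if [ "$(flannel_network "$NET_CONF")" = "$POD_CIDR" ]; then
    echo "Flannel network matches pod CIDR"
else
    echo "mismatch: edit net-conf.json or re-run kubeadm init" >&2
fi
```

The same function can be pointed at the saved manifest file, since it only looks for the `"Network"` key.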

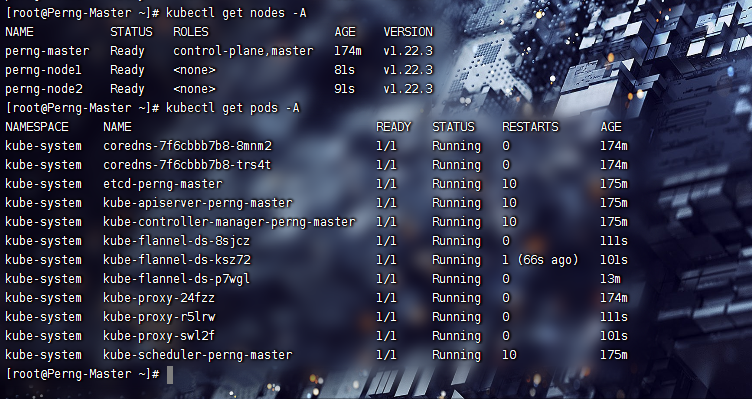

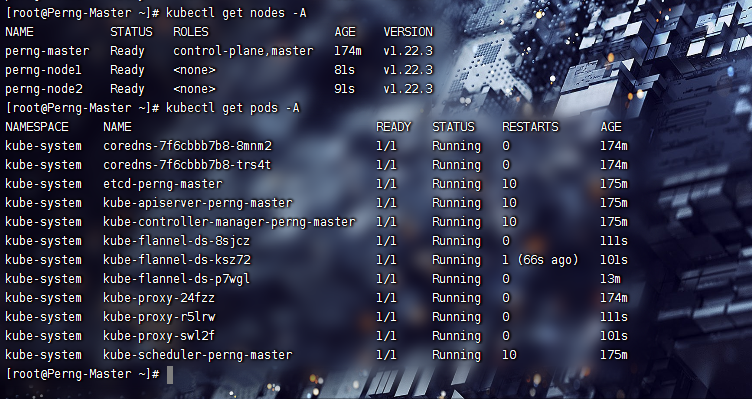

Verification

The result should look like the following.